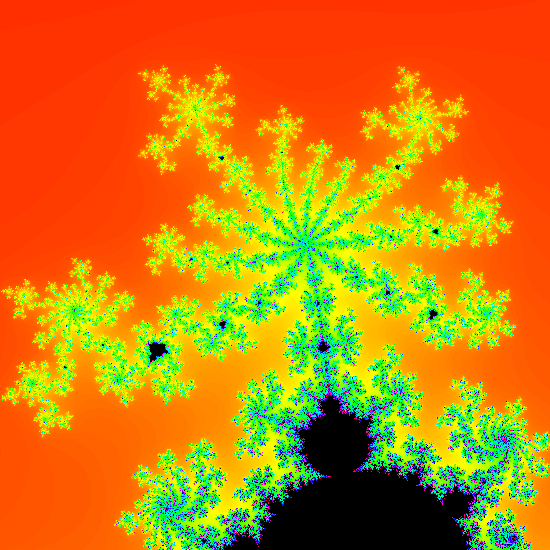

In this series we’re going to build an app to view the Mandelbrot set. While this is not necessarily something that is likely to be of direct use to the majority of app developers (except for those that have a desire to develop their own Mandelbrot set viewing apps), there are some techniques that we’ll explore along the way that most certainly will be of relevance to many.

Previously we rendered the Mandelbrot set in an app, but the image we generated was static and didn’t allow the user to explore the Mandelbrot set. This restriction meant that there was no real reason to generate the image on the fly. But we’re not going to stop there. In this article we’ll add zoom support to allow the user to properly explore.

We’ll start by adding gesture handling to our ImageView. To allow delegation of this functionality, we’ll introduce a TouchHandler interface, along with a MandelbrotView subclass which forwards its touch events to a TouchHandler delegate:

    class MandelbrotView @JvmOverloads constructor(
        context: Context,
        attrs: AttributeSet? = null,
        defaultThemeAttr: Int = -1
    ) : AppCompatImageView(context, attrs, defaultThemeAttr) {

        var delegate: TouchHandler? = null

        @SuppressLint("ClickableViewAccessibility")
        override fun onTouchEvent(event: MotionEvent): Boolean {
            delegate?.onTouchEvent(event)
            return true
        }
    }
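The TouchHandler interface itself only needs a single method. The following is a minimal sketch inferred from how the delegate is called above, and is not necessarily the exact declaration used in the project. So that it can run off-device, MotionEvent here is a hypothetical stand-in for android.view.MotionEvent, and FakeView and RecordingHandler are hypothetical names used purely for illustration:

```kotlin
// Hypothetical stand-in for android.view.MotionEvent so the sketch runs on a plain JVM.
class MotionEvent(val x: Float, val y: Float)

// Assumed shape of the interface: a single method with no return value,
// matching the delegate?.onTouchEvent(event) call in MandelbrotView.
interface TouchHandler {
    fun onTouchEvent(event: MotionEvent)
}

// Hypothetical stand-in for MandelbrotView: forwards every event to the delegate, if any.
class FakeView {
    var delegate: TouchHandler? = null

    fun onTouchEvent(event: MotionEvent): Boolean {
        delegate?.onTouchEvent(event)
        return true // always report the event as handled, as MandelbrotView does
    }
}

// Simple handler that records the events it receives.
class RecordingHandler : TouchHandler {
    val events = mutableListOf<MotionEvent>()

    override fun onTouchEvent(event: MotionEvent) {
        events += event
    }
}

fun main() {
    val view = FakeView()
    val handler = RecordingHandler()

    view.onTouchEvent(MotionEvent(1f, 2f)) // no delegate yet: silently ignored
    view.delegate = handler
    view.onTouchEvent(MotionEvent(3f, 4f)) // forwarded to the handler

    println(handler.events.size) // 1
}
```

Because the delegate is nullable, the view keeps working before a handler is attached: it simply swallows the events.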

We’ll keep things simple, and defer the logic for handling touch events to an implementation of TouchHandler. This will require a minor tweak to our layout, so that it uses MandelbrotView in place of the ImageView.

The next thing we need to do is actually simplify our MandelbrotLifecycleObserver, as we’re going to move some logic out of there as well:

    class MandelbrotLifecycleObserver(
        context: Context,
        lifecycleOwner: LifecycleOwner,
        imageView: MandelbrotView
    ) : CoroutineScope, LifecycleObserver {

        private val job = Job()

        override val coroutineContext: CoroutineContext
            get() = job + Dispatchers.Main

        private val renderer: MandelbrotRenderer = MandelbrotRenderer(context)

        private val viewportDelegate: ViewportDelegate

        init {
            lifecycleOwner.lifecycle.addObserver(this)
            viewportDelegate = ViewportDelegate(context, coroutineContext, imageView, renderer)
        }

        @OnLifecycleEvent(Lifecycle.Event.ON_DESTROY)
        @Suppress("Unused")
        fun onDestroy() {
            job.cancel()
            renderer.destroy()
        }
    }

The responsibility for responding to changes in the size of the ImageView (or, more correctly, the MandelbrotView that we just created), and for triggering the image rendering, now lies with the new ViewportDelegate, which we’ll look at in a moment.

Next we have a utility class that will be used by ViewportDelegate:

    internal class RectD {

        var left: Double = 0.0
            private set

        var right: Double = 0.0
            private set

        var top: Double = 0.0
            private set

        var bottom: Double = 0.0
            private set

        constructor() : this(0.0, 0.0, 0.0, 0.0)

        constructor(l: Double, t: Double, r: Double, b: Double) {
            set(l, t, r, b)
        }

        fun set(l: Double, t: Double, r: Double, b: Double) {
            left = l
            top = t
            right = r
            bottom = b
        }

        val width: Double
            get() = right - left

        val height: Double
            get() = bottom - top
    }

This is a simple copy of some limited functionality from the Android Framework RectF class, only it uses Double values instead of Float.

Now we come to ViewportDelegate, which is the main workhorse. The fundamental concept behind this is that we will create a view port on to the Mandelbrot set. Up until now the view port has covered the entire set and has been of a fixed size. The view port can never go outside of these initial boundaries, but zoom in operations will make the view port smaller, showing a smaller section of the Mandelbrot set, and scroll operations will move the view port around.

The initial view port dimensions will represent the bounds of the area that the user can explore. As the user zooms and pans, the view port will change to be a smaller section of this, but the aspect ratio will remain unchanged. Thus the aspect ratio of the view port will always match that of the ImageView itself.

The remainder of this article will focus on how we can translate pinch, double tap, and long press gestures to alter the size of this view port, effectively zooming in and out of the Mandelbrot set.

It is the view port area that will actually be rendered, and the ViewportDelegate is responsible for controlling the size and location of the view port. There are a number of different areas, so let’s look at them individually:

    init {
        imageView.apply {
            delegate = this@ViewportDelegate
            addOnLayoutChangeListener { _, l, t, r, b, lOld, tOld, rOld, bOld ->
                if (r - l != rOld - lOld || b - t != bOld - tOld) {
                    viewPort.set(l.toDouble(), t.toDouble(), r.toDouble(), b.toDouble())
                    renderer.setSize(r - l, b - t)
                    renderImage()
                }
            }
        }
    }

    override fun onTouchEvent(event: MotionEvent) {
        scaleDetector.onTouchEvent(event)
        gestureDetector.onTouchEvent(event)
    }

In the init block we first set the delegate of the MandelbrotView so that it forwards touch events to us. We also add an OnLayoutChangeListener which, whenever the size of the ImageView changes, resets the view port, calls setSize() on the renderer, and renders the image.

Then we have onTouchEvent(), which overrides the TouchHandler method. This passes the event to both the ScaleGestureDetector and GestureDetector instances. Each detector’s onTouchEvent() handler returns a Boolean indicating whether the event was handled or not. In some cases it is necessary to check these and only continue if the event was not handled. However, in this case there are times when touch events may indicate both scale and scroll, and we want to be able to handle both at the same time, so we always allow both detectors to do their stuff.
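To make the difference between the two dispatch strategies concrete, here is a small sketch that runs on a plain JVM. FakeDetector is a hypothetical stand-in for a gesture detector, and events are plain strings rather than MotionEvent instances; neither is part of the app's code:

```kotlin
// Hypothetical stand-in for a gesture detector: records what it saw and
// reports whether it consumed the event.
class FakeDetector(private val consumes: Boolean) {
    val seen = mutableListOf<String>()

    fun onTouchEvent(event: String): Boolean {
        seen += event
        return consumes
    }
}

// Short-circuiting: the second detector is skipped once the first consumes the event.
fun dispatchExclusive(event: String, first: FakeDetector, second: FakeDetector): Boolean =
    first.onTouchEvent(event) || second.onTouchEvent(event)

// The approach described above: both detectors always see the event.
fun dispatchToBoth(event: String, first: FakeDetector, second: FakeDetector) {
    first.onTouchEvent(event)
    second.onTouchEvent(event)
}

fun main() {
    val scaleA = FakeDetector(consumes = true)
    val gestureA = FakeDetector(consumes = false)
    dispatchExclusive("move", scaleA, gestureA)
    println(gestureA.seen) // []

    val scaleB = FakeDetector(consumes = true)
    val gestureB = FakeDetector(consumes = false)
    dispatchToBoth("move", scaleB, gestureB)
    println(gestureB.seen) // [move]
}
```

With the exclusive version, a detector that consumes the event starves the other one, which is exactly what we want to avoid when a single gesture can be both a scale and a scroll.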

The next set of methods handle the callbacks from the OnScaleGestureListener:

    override fun onScaleBegin(detector: ScaleGestureDetector): Boolean = true

    override fun onScale(detector: ScaleGestureDetector): Boolean {
        scale(
            detector.focusX.toDouble(),
            detector.focusY.toDouble(),
            detector.scaleFactor.toDouble()
        )
        return true
    }

    private fun scale(focusX: Double, focusY: Double, factor: Double) {
        val newViewportWidth = viewPort.width / factor
        val newViewportHeight = viewPort.height / factor
        val xFraction = focusX / imageView.width.toDouble()
        val yFraction = focusY / imageView.height.toDouble()
        val newLeft =
            viewPort.left + viewPort.width * xFraction - newViewportWidth * xFraction
        val newTop =
            viewPort.top + viewPort.height * yFraction - newViewportHeight * yFraction
        updateViewport(newLeft, newTop, newViewportWidth, newViewportHeight)
        renderImage()
    }

    override fun onScaleEnd(detector: ScaleGestureDetector) { /* NO-OP */ }

The only one worthy of explanation is the onScale() callback, which will be received whenever a scale operation is in progress (i.e. the user is doing a pinch-to-zoom). This passes some parameters from the detector to the scale() method, which is a separate method because it will also be used elsewhere. These parameters are focusX and focusY, which represent the centre point between the user’s fingers for the pinch, and scaleFactor, which represents how much the scale has changed since the last time this callback was made.

In the scale() method we first calculate a new width and height of the view port by dividing the existing width & height of the view port by the scale factor. The scale factor is greater than 1 when the user’s fingers move apart, so dividing makes the view port smaller, which zooms in. Next we calculate the relative offset of the focus point as a fraction of the overall width & height of the ImageView. This is necessary because the focusX and focusY values are relative to the ImageView and we need to work out where this point would fall within the current view port. We then use this to calculate the new left and top positions of the view port. We are effectively adjusting the left and top offsets of the view port so that the scaling is centred around the focus point within the view port, which makes it feel like the scaling is happening around the user’s gesture. Finally we call updateViewport() to update the view port dimensions with these new values, and then render a new image for this updated view port. We’ll take a look at the mechanics of this in the next article.
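The arithmetic in scale() can be checked in isolation. The sketch below repeats the same calculation as a pure function; Viewport, zoom() and focusPoint() are hypothetical names introduced only for this illustration, and any clamping that updateViewport() may apply is deliberately left out:

```kotlin
// Hypothetical stand-alone model of the view port: left/top plus size.
data class Viewport(val left: Double, val top: Double, val width: Double, val height: Double)

// Same arithmetic as scale(), but as a pure function with the view size passed in.
fun zoom(
    vp: Viewport,
    viewWidth: Double,
    viewHeight: Double,
    focusX: Double,
    focusY: Double,
    factor: Double
): Viewport {
    val newWidth = vp.width / factor
    val newHeight = vp.height / factor
    val xFraction = focusX / viewWidth
    val yFraction = focusY / viewHeight
    return Viewport(
        left = vp.left + vp.width * xFraction - newWidth * xFraction,
        top = vp.top + vp.height * yFraction - newHeight * yFraction,
        width = newWidth,
        height = newHeight
    )
}

// Position within the view port that a given fractional on-screen point maps to.
fun focusPoint(vp: Viewport, xFraction: Double, yFraction: Double): Pair<Double, Double> =
    Pair(vp.left + vp.width * xFraction, vp.top + vp.height * yFraction)

fun main() {
    val before = Viewport(0.0, 0.0, 1000.0, 500.0)
    // Zoom in by 2x around a point a quarter of the way across and half way down the view.
    val after = zoom(before, 1000.0, 500.0, focusX = 250.0, focusY = 250.0, factor = 2.0)

    println(after)                        // Viewport(left=125.0, top=125.0, width=500.0, height=250.0)
    println(focusPoint(before, 0.25, 0.5)) // (250.0, 250.0)
    println(focusPoint(after, 0.25, 0.5))  // (250.0, 250.0)
}
```

The point under the user's fingers maps to the same position before and after the zoom, which is what keeps the scaling anchored to the gesture. Width and height are divided by the same factor, so the aspect ratio is preserved.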

Next we have the SimpleOnGestureListener overrides:

    override fun onDoubleTap(e: MotionEvent): Boolean {
        scale(e.x.toDouble(), e.y.toDouble(), 2.0)
        return true
    }

    override fun onLongPress(e: MotionEvent?) {
        updateViewport(0.0, 0.0, imageView.width.toDouble(), imageView.height.toDouble())
        renderImage()
    }

The onDoubleTap() method enables us to provide a quick zoom option whenever the user double taps on the screen. It utilises the scale() method that we just looked at by zooming in by a factor of 2 around the point where the double tap was detected. Now the reason for abstracting the scale() method should be obvious: it saves us from having to duplicate this logic.

The onLongPress() method will reset the view port back to the start position. This can be useful if the user gets lost while exploring and just wants to reset back to the start point. It calls updateViewport() with zero offsets and the dimensions of the ImageView, which is the start position, and then renders the image.
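As a rough illustration of how these two gestures interact, the sketch below tracks only the size of the view port. ViewportModel is a hypothetical class written for this example; it ignores the view port position and is not the ViewportDelegate implementation:

```kotlin
// Hypothetical, simplified model: only the view port width and height are tracked.
class ViewportModel(private val viewWidth: Double, private val viewHeight: Double) {
    var width: Double = viewWidth
        private set
    var height: Double = viewHeight
        private set

    val aspectRatio: Double
        get() = width / height

    // Double tap: zoom in by a fixed factor of 2.
    fun doubleTap() {
        width /= 2.0
        height /= 2.0
    }

    // Long press: back to the full size of the view.
    fun longPress() {
        width = viewWidth
        height = viewHeight
    }
}

fun main() {
    val model = ViewportModel(1080.0, 1920.0)
    repeat(3) { model.doubleTap() }
    println(model.width)  // 135.0
    println(model.height) // 240.0

    model.longPress()
    println(model.width)  // 1080.0
}
```

Three double taps shrink the view port to an eighth of its original size in each dimension while the aspect ratio stays the same, and a single long press undoes all of them.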

That covers how we resize the view port, but we also need to allow the user to scroll around as well. In the next article we’ll cover that, and then apply the changes to the view port to the actual image rendering which will allow the user to properly explore the Mandelbrot set.

Usually I like to publish working code along with each article, but in this case there was simply too much to squeeze in to a single post (believe me, I tried – this article alone is already pretty big). So there’s no code available for this article. However in the next article we’ll finish getting the pan & zoom functionality working and the code will be published along with that.

© 2019, Mark Allison. All rights reserved.

Copyright © 2019 Styling Android. All Rights Reserved.

Information about how to reuse or republish this work may be available at http://blog.stylingandroid.com/license-information.